I have been thinking about bugs, responsible disclosure, crowd behaviors and ethical responsibilities. This post is a choose-your-own-adventure game for security researchers. I want to share some simple yet common scenarios with you, and outline our various options as responsible computer scientists.

My main point will be that there are no good options: no matter what you do in the choose-your-own-adventure game, everyone dies. The trolley, the fellow at the switch, the folks on the line, and the folks on the other line as well. And they die because we are, collectively, acting like morons. You and I and everyone else are complicit. This post is an attempt to ask that the crowd learn to demand the right kind of assurance from software, and the right kind of behavior from all the parties involved, because we can do better.

But what if he really did?

BTW, as you read the scenarios outlined below, I'm sure you'll be convinced that I'm criticizing some specific coin or project, except no two readers will agree on which coins. This post is not about The DAO or the-coin-which-cannot-be-named-or-else-they-conjure-a-butthurt-online-brigade and also no-not-that-one-the-other-one or even oh-my-god-they-all-do-that. It's about all of us. The entire set of scenarios is synthetic -- an amalgamation of Sorry-For-Your-Loss (SFYL) events I've seen play out in cryptocurrencies over the years. No need to make it personal, it already is.

Imagine that there is a software project out there, developed by some group that is not you. Let's assume that they have found a way to monetize this process. Maybe they are offering consulting services, maybe they are profiting directly by building a cryptocurrency and selling some tokens, maybe they are directing users to side services that they operate to finance this process. Somehow, they are making money off of this thing.

You, of course, are not making money off of their thing. You have no connection to them, no legal obligations. This post is about your ethical obligations, the things you have to do so you can sleep well at night and occasionally visit your relatives' graves without feeling like an utter disappointment.

Now imagine that you know of a fundamental flaw in this project.

What do you do?

Before you answer, let's fill in with some realism.

Let's add some details about you, with absolutely no loss of generality. The following backstory is almost always universal.

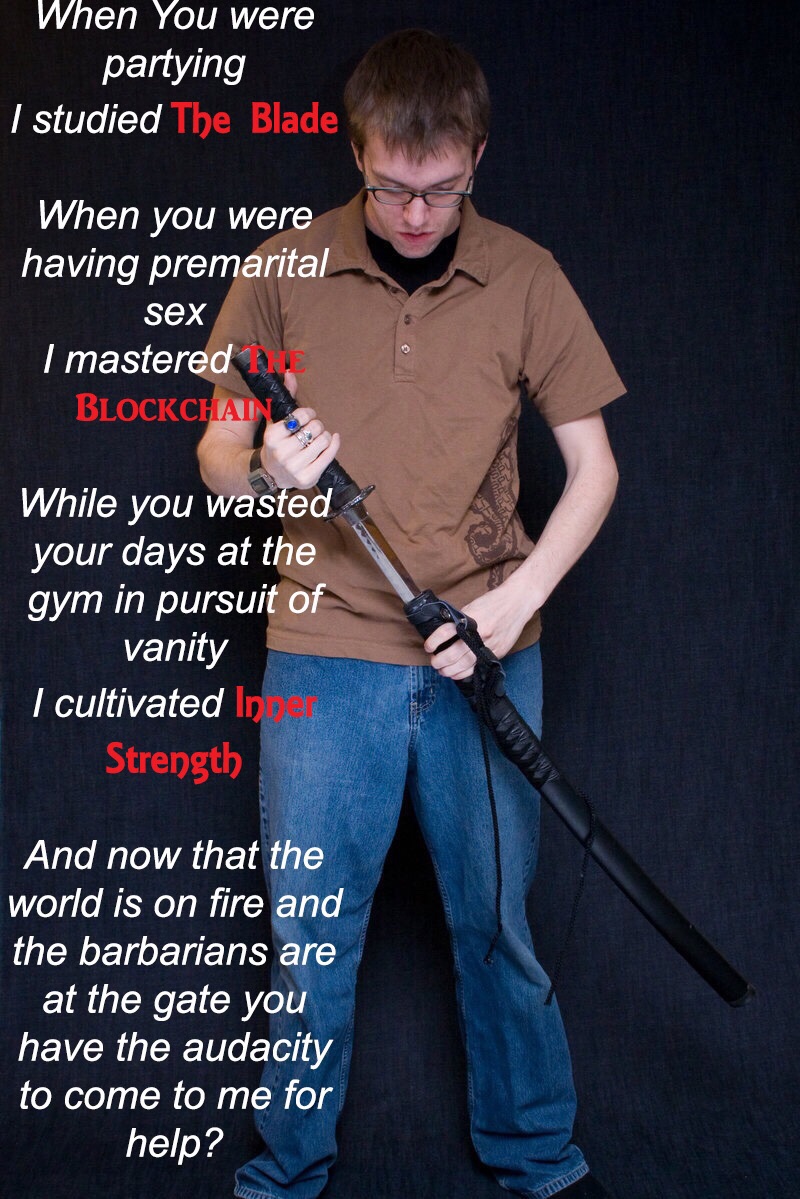

You didn't just happen to chance upon this flaw. No one just chances upon a flaw that has eluded the main developers who have a profit motive to ferret out the bugs. You spent years of effort, building specialist expertise. When the developers were throwing a pre-pre-launch party, you were combing arcane papers by Lamport. When the dev team was out making it rain ICO-cash in the club, you were stepping through similar code with gdb. And when they were out having "pre-marital sex," as the meme goes, you were thinking about techniques to avoid this particular flaw.

But let's be realistic: you don't actually know for certain that there is a flaw in this specific system right at this time. Sure, you're an expert at this problem, sure, it certainly feels like they have it, but you have not (yet) combed through this particular system. You have a 99% suspicion that they might have this flaw. But there's a 1% chance that they have some other mechanism in place that makes it impossible to exercise the flaw.

Again without loss of generality, the following is almost universal.

The developer team was working in a hot area with a lot of competition. They could have spent an extra year or two on their project to make sure it is well-executed. But, ya know, the space is really competitive. Time is short, besides, the Hooha Coin has a whitepaper that sounds exactly alike, with phrases lifted directly and updated with additional buzzwords, so there is no time, they need to hit the market.

The developer team sought advice on business development from successful role models. This really means people who happened to be at the right place at the right time, who have now cashed out and style themselves as successful VCs. As of 2018, it was commonly accepted wisdom to "fake it 'til you make it." They literally told young people to engage in fraud.

Some people on the dev team had been through business school. The main thing they learned in business school was how to network, but the second thing they learned was how to cut corners, how to short-change people on implicit expectations and pocket the difference. There is no law to hold you back from going to market with a possibly broken protocol as long as you yourself do not actively know, for certain, that it is definitively broken.

Finally, the dev team has followed best practices, as taught in school. They used Mongo but they made the port non-public [1]. They did not invent their own crypto. At the risk of going on a side-rant: what is it with disciplines that teach people to stay away from their discipline? Why is it that cryptographers get to tell people to leave cryptography to others, but somehow it's OK to build your own consensus protocol, "eventually-consistent" NoSQL engine that is actually plain old inconsistent, or your own programming language with weird "wat?" semantics? Anyhow, the dev team did whatever it is that we teach at your average top-10 school, the end result of pointless faculty meetings and that special out-of-touchness that comes from the lack of a mandatory retirement age for faculty in the US.

We can now see what happens when you speak up, or don't. In what follows, bold indicates options, NMD means "No More Decisions" down that path, The End means the book ended and everyone lost.

You watch the system get deployed with great fanfare. Someone else finds the same flaw. [Restart, from their perspective]

The weather is really nice in Thailand. You tell yourself that 2 out of 3 people would take advantage of a serious exploit instead of reporting it if they could get away with the proceeds. 1 out of 5 would use an exploit if it made them $1 more than the bounty.

The border crossings into the US to see living relatives cause a slight feeling of anxiety, but you reassure yourself with "Code is Law, bro." You occasionally get the feeling that your dead relatives are judging you hard, but the hotel staff know this and keep you well supplied with Mai Thais. [The End]

You mention on Twitter that the protocol may be buggy. What happens next depends on your reach.

You have no following. No one pays any attention to you. The bug is exploited by an address connected to other hacks laundered on BTC-e, and everyone loses money. You are an utter disappointment. Doubly so for not exploiting the hack yourself. [The End.]

You seek help from someone with a large following. He somehow read your nicely composed letter among the dozens of kooky messages he gets every day, some involving a guy who sold his heater and wants ICO recommendations for his $150, and many involving whitepapers with the exact same bug as what you disclosed. Now he knows what you know, and has a large following. [Restart and follow along from his perspective]

You have a large following. Crowds upvote your post. It makes it to the top of Hacker News. You get 100,000 viewers, approximately 10 times the scrutiny in one day that a typical, award-winning faculty member gets over his or her life's work.

That one guy who is upset at you because you previously pointed out his errors posts something snide. Crowds vote it up because they like drama, and he has numbered references that lead to nonsensical web pages and IRC logs that do not actually support whatever inanity he is spouting. Even though no one actually clicks on the links, a blue link is considered a full refutation regardless of its destination. You ignore him and all the idiots who upvoted him. [NMD]

The aura of negativity created by the previous guy has worked. A friend posts in the HN thread and joins ranks with him. She drops in a snide reference to something personal, like your ethnic origin. You ignore her, knowing that she will come before you in a few years seeking a job. You contemplate what you'll do then: will you remind her of this, or will you never speak of her behavior? You now have two ethical dilemmas. [NMD]

Ok, the developer team goes into overdrive, immediately denying that there is a flaw. They point out that you do not have an exploit. They call your post FUD, and you a shill for the perceived competition. This seems wrong, because you did not have time to short these guys or long the competition, because you were actually thinking about the exploit, while these guys seem to be spending all their time day trading coins.

The people calling you out for FUD are getting aggressive. While 97% of your inbox consists of people saying "thank you for what you do," the remaining 3% is full of vitriol and kind of annoying.

[Optional Side Story] You receive an unmarked package in the mail. Not knowing if it's a bomb, you put it on a steel table, crouch, and, holding the scissors with your left hand, open it. As you do this, you curse the day you said anything in public... It turns out to be dessert from a fan. You are immediately relieved. It's your favorite kind, too. You gaze at it and laugh at yourself for thinking it was a bomb... You have a jolly good time for 5 minutes, after which you realize that the dessert might be poisoned. You offer it to your colleagues, wondering how much plausible deniability you have if they keel over. [NMD]

In response to FUD allegations, do you:

C1. Ignore them and spend your time on other endeavors instead of building an exploit.

C2. Spend the time building a full exploit.

Someone else writes the exploit for your flaw.

He uses it to steal everyone's money. Everyone lost. You are widely criticized for making the issue public and enabling the theft. [The End]

He does not use it to steal everyone's money. [Go to C2, from his perspective]

You already had a day job. Now you're working for people who never engaged you, never paid you, and never will compensate you. They will never even be grateful to you. You're basically donating your cycles to a bunch of undeserving people. The guy at the garage with the specialized OBD2 reader won't connect it to your car for less than $15, and here you are, in the highest-tech field, clocking in free hours for someone who is already upset at you for speaking up. You feel bad for being co-opted into someone else's broken money-making scheme.

What happens next depends on the quality of your exploit.

Your exploit does not work, the bug isn't there. It was the 1% case. Some random other feature made your bug unexploitable, so you look like a fool.

Two years later, the developers go on to build another system, but this time omit the circumstantial feature that kept the bug from being exploited. They lose everyone's money. You feel vindicated, and happy even, which only exacerbates the unease you feel during visits to the family cemetery. [The End]

The bug is present, but you realize it would take too long to develop an exploit. You have better things to do. The developers pretend that it's the 1% case. They are lying, of course.

Two years later, some no-talent security researcher rediscovers the exact same flaw you pointed out. He is unemployed and has the time to develop an exploit. He does not give you any credit. Today, when you google keywords for your own post, his posts come up as the first links. It feels weird. When you see him at a conference, he scampers away. People ask you how this daft guy knows so much about subtleties in your expertise area. [Restart at C2 from his perspective]

Your exploit works, you release it publicly. Your colleagues pull you aside and lecture you about responsible disclosure. They tell you exactly how you should have run your life, how you have an obligation to your own following, and what they themselves would have done had they ever been in your position, but, you see, it's hard to get out of armchairs.

Someone uses your exploit to steal everyone's money. The devs, who previously called on you to develop an exploit, now attack you for developing an exploit. An FBI agent leaves a message wanting to talk to you, and you cannot tell if it's because you have interesting insights, or because you're a person of interest. Everyone lost. [The End]

Your exploit works, you give it to the developers. What happens next depends on whether the developers are hostile or not.

C2a. The devs are actively hostile, deny that it works. They start peppering you with mumbo jumbo. You have no time for this. The developers suddenly release an extensive patch, mentioning you in a byline, but also say that your exploit never actually worked. Someone who has been watching their code repository duplicates your exploit and steals money from unpatched installations. Everyone loses. [The End]

C2b. The devs are passively hostile, ignore you. After a few weeks, you release your exploit. The aftermath is exactly the same as the case where you release the exploit immediately upon writing it. There is no universally agreed-upon waiting period, nor is a single period suitable for all bugs and all projects. No matter what you do, you will be treated as if you revealed the exploit irresponsibly. [The End]

C2c. The devs are nice people. They acknowledge you, they give you a bounty, and release a patch.

[Optional side story] But at about the same time when you notified the devs of the bug, others heard your exact idea, repackaged it using different words, and now you have to split the bounty. Interestingly, you share the bounty with someone who suggested a way to rewrite the C code that does not impact the produced binary at all -- the devs who were too incompetent to fix the problem are too incompetent to tell who had a material fix and who was just making noise. [NMD]

You feel like you did the right thing. But you took a lot of risk -- there were many paths along the way where this could have turned out differently.

The bounty is a flat $10k. You figure that you put in about 30 hours just in developing the exploit itself. $333/hour is far below your consulting rate, but it seems like a reasonable figure. Yet, like an Uber driver who doesn't realize that the amount he's getting paid per hour doesn't cover the upkeep of his vehicle, you do not realize that you forgot to charge for all the time and effort required to get you to the point where you were able to recognize the error in the first place. When you factor that in, you made around $3/hour. You wonder why there are very few older folks who do what you did.
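The arithmetic above can be checked with a minimal sketch. The $10k bounty, the 30 hours and the $3/hour figure come from the text; the number of prior hours is not stated anywhere, so the sketch backs it out from the $3/hour figure rather than assuming it. The function names are made up for illustration.

```python
# Figures taken from the text: a flat $10k bounty, about 30 hours on the exploit.
BOUNTY_USD = 10_000
EXPLOIT_HOURS = 30


def hourly_rate(payout_usd: float, hours: float) -> float:
    """Payout divided by hours worked."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return payout_usd / hours


def implied_hours(payout_usd: float, rate_usd_per_hour: float) -> float:
    """Total hours that would make a payout work out to the given hourly rate."""
    if rate_usd_per_hour <= 0:
        raise ValueError("rate must be positive")
    return payout_usd / rate_usd_per_hour


# The rate you see if you only count the hours spent on the exploit itself.
naive_rate = hourly_rate(BOUNTY_USD, EXPLOIT_HOURS)

# ASSUMPTION: the text only says "around $3/hour", so the total is derived
# from that figure; it is not a number the post states.
total_hours = implied_hours(BOUNTY_USD, 3.0)
prior_hours = total_hours - EXPLOIT_HOURS

print(f"naive rate: ${naive_rate:.0f}/hour")          # naive rate: $333/hour
print(f"implied total hours: {total_hours:.0f}")      # implied total hours: 3333
print(f"implied prior hours: {prior_hours:.0f}")      # implied prior hours: 3303
```

In other words, the $3/hour figure corresponds to a few thousand hours of accumulated expertise behind those 30 visible ones.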

The exploit would have cost tens to hundreds of millions. You wonder if it would have made more sense to just use the exploit.

Meanwhile, someone else finds a different bug in the same system, and just uses it to steal money. The dev team is perplexed. They know they are nice people, unlike all those other dev teams who deny the existence of bugs and run PR campaigns against the people who call out problems. They know they treated you nicely. They have a Slack channel full of people, unlike everyone else who runs a Slack channel of shills and mobbing agents. They can't understand why more people don't come out and report more bugs.

The security flaw reporting game is completely broken. Empirically, we can see that we have an undeniable problem. People do not come forward, and if we think critically, there is absolutely no reason for them to come forward. There have been so many bad actors that the few people who step up ought to be sainted.

The root cause of the problem is that almost every project I know, some worth many many billions, is being run as if it's Billy Bob's Software Shack and Associated Slack Channel of Speculators and Online Mob.

The bounty programs are identical in nature and amount in cryptocurrencies and all other software. This is insane. One is meant to build something like a banking system that holds billions, the other runs on a machine in someone's den that holds Aunt Beth's pictures.

Many large projects do not even have a Chief Security Officer, whose job is to assess the severity of the flaws independently of the development team. That decoupling is essential, not only because it brings a different pair of eyes to the problem, but also because it separates the egos from the software artifact and makes the problem scenarios less likely to play out.

Personally, I will soon actively divest from coins that lack a dedicated person in charge of security. I suggest that you all do the same. The current situation is laughable, and the scrutiny it brings to cryptocurrencies is bad for all.

And there are other things we can do collectively: (1) Actively avoid projects that demand exploits before acknowledging problems, or otherwise create friction, (2) Do not fan the flames of online drama involving security flaws, everyone has errors, let people acknowledge and patch their code in peace, and (3) come up with better incentives and payout schedules for bounties, and at least use a sliding scale based on severity.
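To make point (3) concrete, here is one way a sliding scale could look, as a rough sketch and not a recommendation. Everything in it is hypothetical: the severity tiers, the basis-point fractions, the floors and the cap are invented for illustration and do not come from any real bounty program. The only idea taken from the text is that the payout should scale with severity, and with what is at stake, instead of being flat.

```python
from enum import Enum


class Severity(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"
    CRITICAL = "critical"


# HYPOTHETICAL numbers, chosen only to illustrate the shape of a sliding scale.
# Share of the funds at risk paid out, in basis points (1 bp = 0.01%).
SHARE_BPS = {
    Severity.LOW: 0,
    Severity.MEDIUM: 10,
    Severity.HIGH: 100,
    Severity.CRITICAL: 500,
}
# Minimum payout per tier, so small projects still pay something.
FLOOR_USD = {
    Severity.LOW: 500,
    Severity.MEDIUM: 2_000,
    Severity.HIGH: 10_000,
    Severity.CRITICAL: 50_000,
}
# Upper bound on any single payout.
CAP_USD = 10_000_000


def bounty_usd(severity: Severity, funds_at_risk_usd: int) -> int:
    """Payout that grows with severity and funds at risk, within floor and cap."""
    if funds_at_risk_usd < 0:
        raise ValueError("funds_at_risk_usd must be non-negative")
    scaled = funds_at_risk_usd * SHARE_BPS[severity] // 10_000
    return min(CAP_USD, max(FLOOR_USD[severity], scaled))


# A critical flaw in a system holding $100M pays far more than a flat $10k.
print(bounty_usd(Severity.CRITICAL, 100_000_000))  # 5000000
# A low-severity report falls back to the tier's floor.
print(bounty_usd(Severity.LOW, 100_000_000))       # 500
```

The floor keeps reporting worthwhile on small projects, and the cap keeps the program solvent; where to set either is exactly the kind of decision a dedicated security person should own.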

It is time to start demanding better practices from multi-billion dollar software projects, and to start behaving better as a crowd.

[The End.]

[1] ;-)