Every single programmer out there is familiar with bloat. It's everywhere: enterprise software that requires the enterprise to change its processes (aka "why do courses at Cornell have 4-digit numbers?"), finance software (of any kind except HFT), JavaScript frameworks (efforts to reuse left-pad notwithstanding), web backends (hello there Django middleware), RDBMSs, OSes, USB drivers, browsers, browser plug-ins, PDF viewers that are actually document publishing systems, phone apps, you name it.

But dev teams don't have "scrum task boards" with post-its attached that say "ADD BLOAT" on them. Iranian sleeper cells aren't submitting pull requests against open source projects (when secret services add backdoors, as they did with Juniper, they seem to do it very elegantly by modifying previous backdoors -- no bloat!). So, how does software get bloated? Who is behind it? What process is at fault?

Found the culprit.

A naive view is that bloat stems from clueless developers who don't quite know what they are doing. We have all written convoluted code, especially when we didn't quite grasp the underlying primitives well enough.

But the bloat in a large, well-funded, significant software project is typically not the result of cluelessness. I think this stems from the simple observation that software productivity follows a Zipf distribution: a large portion of the code is typically contributed by just a few competent programmers, so the clueless do not have that many opportunities to wreak havoc.

In my experience, software bloat almost always comes from smart, often the smartest, devs who are technically the most competent. Couple their abilities with a few narrowly interpreted constraints, a well-intentioned effort to save the day (specifically, to save today at the expense of tomorrow), and voila, we have the following story.

Perhaps the best example of bloat, relayed to me when I was working at a large telco, involves a flagship phone switch. This was a monster switch, capable of running a large metro region with millions of subscribers. It ran Unix at its core, but the OS was just a little sideshow compared to the monstrous signaling protocol implementation, rumored, if I remember correctly, to be about 15M lines of code.

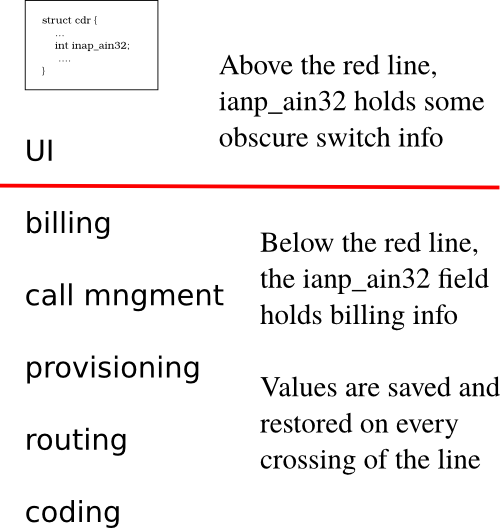

Suppose you're operating on a code base that big, and you want to add a field to a struct. Say, you want to add a field to the call-data-record (CDR) structure to indicate if the called party is on a short list of friends and family. The sensible thing to do would be to go to the struct definition, and insert "uint is_friend_fam:1;" That would add an extra bit to the struct, and then you can do whatever you want throughout the code.
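To make the size concern concrete, here is a minimal sketch of the "sensible" change. Only `inap_ain23` and `is_friend_fam` come from the story; the other field names and the fixed-width types are assumptions made up for illustration, and the real CDR layout is unknown. The point is only that a one-bit field cannot be added for free: the struct must grow by at least a byte, and usually by a whole aligned word.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical CDR before the change. Only inap_ain23 is named in the
 * story; caller_id and callee_id are invented for this sketch. */
struct cdr_v1 {
    uint32_t caller_id;
    uint32_t callee_id;
    uint32_t inap_ain23;
};

/* The same record with the one-bit flag appended. */
struct cdr_v2 {
    uint32_t caller_id;
    uint32_t callee_id;
    uint32_t inap_ain23;
    unsigned is_friend_fam : 1;
};

/* A bit-field needs its own storage here, so the record must grow. */
static_assert(sizeof(struct cdr_v2) > sizeof(struct cdr_v1),
              "adding the flag changes the size of the record");

/* How many bytes the "one extra bit" actually costs on this platform. */
size_t cdr_growth_bytes(void) {
    return sizeof(struct cdr_v2) - sizeof(struct cdr_v1);
}
```

The exact growth depends on the compiler and ABI, which is part of why nobody wanted to find out on a live switch.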

But when the code is that big, and when your downtime limit is 2 hours in 40 years, and some field technician who was replacing a backup power supply back in 1987 pressed the wrong circuit breaker and blew half of your downtime budget in the Chicago area with his fat fingers, you can't just change the size of the struct. Because that might change where CDR structs are allocated, how much extra space there is around the CDR object, and what happens to code that mistakenly writes past the struct. This would have untold, unforeseeable consequences, none of them good.

So the switch developers came up with an absolutely brilliant idea. I'll give you a moment to think about what you'd do in a similar situation. You may assume that the code follows a layered architecture, typical of networking code.

OK, see if your solution matches the following brilliant one:

The path to hell is paved with good intentions and clever tricks.

So, you go into the CDR struct definition. Find the field that looks like it's the least important and least used overall, and make sure it's not used at all below your layer in the call stack. Suppose CDR contains something called "uint inap_ain23" that is used solely above your layer. You do not have to have any idea what inap_ain23 is or does. What you do is save the value stored in inap_ain23 when control flow passes through your layer. So, below your layer, "inap_ain23" is no more. You just "repurposed" it. It is now "is_friend_fam." You may alias it like so "#define is_friend_fam inap_ain23" to make things easy for you. Plus, you got some extra bits! Bonus!

The only thing you need to make sure of is that you place some code to intercept every code path from the layers below to above yours. Because on those paths, you need to stick back whatever value you found in "inap_ain23" prior to when you were called. If you don't do that, the switch will surely break.
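The save, repurpose, and restore dance can be sketched roughly as follows. This is an illustration under stated assumptions, not the switch's actual code: `our_layer`, `lower_layer`, and the two ID fields are hypothetical names, and a real system would have many return paths rather than the single one shown here.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical CDR layout; only inap_ain23 comes from the story. */
struct cdr {
    unsigned caller_id;
    unsigned callee_id;
    unsigned inap_ain23;   /* meaningful only to the layers above ours */
};

/* The alias from the story: below our layer, the field means something else. */
#define is_friend_fam inap_ain23

/* Stand-in for a lower layer that only knows the repurposed meaning. */
static unsigned lower_layer(const struct cdr *rec) {
    return rec->is_friend_fam ? 1u : 0u;   /* e.g. choose a discounted rate */
}

/* Our layer: save the old value, repurpose the field, call down, restore. */
unsigned our_layer(struct cdr *rec, unsigned friend_flag) {
    assert(rec != NULL);
    unsigned saved = rec->inap_ain23;      /* stash the upper layers' value */
    rec->is_friend_fam = friend_flag;      /* overwrite it with our flag */
    unsigned result = lower_layer(rec);
    rec->inap_ain23 = saved;               /* must happen on EVERY path back up */
    return result;
}
```

With one exit point the restore is easy to get right. The trouble described in the story starts when there are error paths, callbacks, and early returns, each of which needs its own restore.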

So that was bad, but it gets worse. It's pretty much impossible to catch every control path back up. Someone will slip up and leave the friends-and-family bit where the control information ought to be for the database, which can cause a massive crash. So the engineers had a process whose job was to scan through data structures, in the heap of the live system, and check for invariants like "inap_ain23 must contain a port number unless the top bit is 1" and so forth. And when it detected an invariant violation, this process would monkey-patch the data structs as best as it could in an effort to avoid downtime. Let me repeat: they were guessing at what the fields ought to contain, and just patching them.
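A rough sketch of what such an auditing pass might look like, using the example invariant quoted above. Everything beyond that invariant is an assumption: the 32-bit field width, the 16-bit port range, the array of records standing in for "the heap," and the choice of 0 as the patched-in guess are all made up for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical CDR layout; only inap_ain23 comes from the story. */
struct cdr {
    uint32_t caller_id;
    uint32_t callee_id;
    uint32_t inap_ain23;
};

#define TOP_BIT    0x80000000u  /* assumed marker: "this is not a port number" */
#define MAX_PORT   65535u       /* assumed valid port range */
#define GUESS_PORT 0u           /* the auditor's guess when the invariant fails */

/* "inap_ain23 must contain a port number unless the top bit is 1" */
static int invariant_holds(const struct cdr *rec) {
    return (rec->inap_ain23 & TOP_BIT) != 0 || rec->inap_ain23 <= MAX_PORT;
}

/* Scan live records and overwrite anything that looks wrong with a guess.
 * Returns how many records were patched. */
size_t audit_and_patch(struct cdr *recs, size_t n) {
    assert(recs != NULL || n == 0);
    size_t patched = 0;
    for (size_t i = 0; i < n; i++) {
        if (!invariant_holds(&recs[i])) {
            recs[i].inap_ain23 = GUESS_PORT;  /* a guess, not a recovery */
            patched++;
        }
    }
    return patched;
}
```

Note what this sketch cannot catch: a leftover friends-and-family flag with value 1 is also a perfectly plausible port number, so it passes the check untouched.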

So, "repurposing" a field seems pretty ugly. You need to save the field to an auxiliary area, perhaps on the stack, perhaps somewhere else on the heap, far away from the precious and inviolable CDR structure, and remember to restore it on every single path back up. You'll incur the performance penalty of two writes on every call and also on every return. Someone has to run a dynamic check through the heap to catch the cases where you slip up.

But this is hardly the disconcerting part of the story. That comes next.

Imagine what happens when this brilliant engineer blabs at lunch about her cool trick of repurposing fields. Imagine what happens at scale.

That didn't take long, did it?

The rumor is that in this switch, on just about every cross-layer function call, some brilliant code would save away and repurpose a field, and on every return, it would restore the old value, like some kind of a weird shell game, where no field in a data structure is ever what it is labeled to be.

And which fields, do you think, would our brilliant engineers settle on for this shell game? Why, of course, that inap_ain23 sure doesn't look that important. Since the brilliant folks ended up repurposing the same "not very frequently used" fields, it turned out that the most innocuous, least important looking fields in the switch's critical data structures were actually the most important, the ones accessed most heavily.

I was reminded of this telco story as Bitcoin devs pondered whether they should effectively increase the size of a bitcoin block through a "soft-fork" mechanism. I don't want to rehash Bitcoin's soft vs. hard-fork debate here, but some background is in order.

In essence, some Bitcoin devs are considering a trick where they repurpose "anyone can spend" transactions into supporting something called segregated witnesses. To older versions of Bitcoin software deployed in the wild, it looks like someone is throwing cash, literally, into the air in a way where anyone can grab it and make it theirs. Except newer versions of software make sure that only the intended people catch it, if they have the right kind of signature, separated appropriately from the transaction so it can be transmitted, validated and stored, or discarded, independently. Amazingly, the old legacy software that is difficult to change sees that money got thrown into the air and got picked up by someone, while new software knew all along that it could only have been picked up by its intended recipient. It is, by every metric, a very clever idea, and I have tremendous respect for the people who came up with it. Most of my brain feels that this is a brilliant trick, except my deja-vu neurons are screaming with "this is the exact same repurposing trick as in the phone switch." It's just across software versions in a distributed system, as opposed to different layers in a single OS [1].
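The parallel can be shown with a deliberately tiny toy model. None of this is Bitcoin's actual data structures or validation code: the types, the function names, and the non-cryptographic `toy_commit` are all invented for illustration. The only thing the sketch tries to capture is the shape of the trick, where old software reads the output as spendable by anyone while new software reads the same bits as a commitment that a separately carried witness must satisfy.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Toy stand-in for a cryptographic commitment. NOT a real hash. */
static uint32_t toy_commit(uint32_t secret) {
    return (secret ^ 0x9E3779B9u) * 2654435761u;
}

/* Old software sees an opaque value on an anyone-can-spend output. */
struct toy_output { uint32_t commitment; };

/* The witness travels separately from the rest of the spend. */
struct toy_spend  { int has_witness; uint32_t witness; };

/* Old rules: the output looks spendable by anyone, so accept. */
int old_node_accepts(const struct toy_output *out, const struct toy_spend *spend) {
    (void)out;
    (void)spend;
    return 1;
}

/* New rules: the same field is reinterpreted as a commitment that the
 * witness must match. */
int new_node_accepts(const struct toy_output *out, const struct toy_spend *spend) {
    assert(out != NULL && spend != NULL);
    return spend->has_witness && toy_commit(spend->witness) == out->commitment;
}
```

In this model, anything the new rules accept is also accepted by the old rules, which is what lets the change roll out without touching the old software. It is also the same move as the `#define` in the switch: one field, two meanings, depending on who is reading.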

Keeping clever tricks out of software is impossible, and probably undesirable. But it is crucial to understand the costs, so one can effectively weigh them against the benefits.

As systems get bloated, the effort required to understand and change them grows exponentially. It's hard to get new developers interested in a software project if we force them to not just learn how it works, but also how it got there, because its process of evolution is so critical to the final shape it ended up in. They became engineers precisely because they were no good at history.

If a developer wasn't around to follow through the development of a given piece of software, they will find the final state of the code disagreeable, at odds with how a smart engineer would have designed it had they done it from scratch. So they'll be less likely to commit to and master the platform. This is not good for open source projects, which are constantly in need of more capable developers. Nor is it good for systems where a network effect is crucial to the system's success.

The Bitcoin segwit discussion has been cast in terms of hard versus soft forks, with many reasonable arguments on both sides. I hope this discussion makes it clear that it's not just soft vs. hard forks, but it's also soft forks versus diminished interest from future developers. These kinds of clever tricks incur, not a technical, but a social debt that strictly accrues over time.

My personal stance on the Bitcoin front is that I'm OK with segwit, but this uses up Bitcoin's lifetime allotment of clever hacks -- any additional complexity will relegate Bitcoin into the same category as telco switch code.

Luckily, complexity and bloat are often their own cure: after some threshold, people just cast aside the bloated system and move on to cleaner, more elegant platforms.